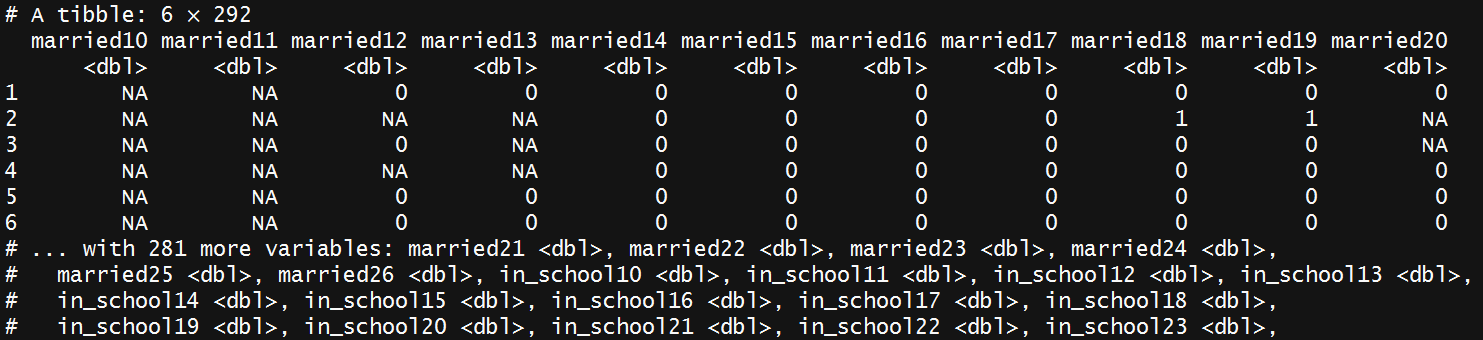

class: center, top, title-slide # Intermediate R ## UW Tacoma ### Charles Lanfear ### May 4, 2019<br>Updated: May 4, 2019 --- # Overview 1. Subsetting Data 2. Creating Variables 3. Summarizing Data 4. Tidying Data 5. Joining Data 6. Resources for Further Learning --- class: inverse # Setup --- # Packages Used Today This workshop focuses on using packages from the [`tidyverse`](https://www.tidyverse.org/). -- The `tidyverse` is a collection of R packages which share a design philosophy, syntax, and data structures. -- The `tidyverse` includes the most used packages in the R world: [`dplyr`](https://dplyr.tidyverse.org/) and [`ggplot2`](https://ggplot2.tidyverse.org/) -- You can install the *entire* `tidyverse` with the following: ```r install.packages("tidyverse") ``` -- We will also use the `gapminder` and `nycflights13` datasets: ```r install.packages("gapminder") install.packages("nycflights13") ``` --- class: inverse # Subsetting Data with `dplyr` --- # But First, Pipes: `%>%` `dplyr` uses the [`magrittr`](https://cran.r-project.org/web/packages/magrittr/vignettes/magrittr.html) forward pipe operator, usually called simply a **pipe**. We write pipes like **`%>%`** (`Ctrl+Shift+M` or `⌘ +Shift+M`). -- Pipes take the object on the *left* and apply the function on the *right*: `x %>% f(y) = f(x, y)`. Read out loud: "and then..." -- ```r library(dplyr) library(gapminder) gapminder %>% filter(country == "Canada") %>% head(2) ``` ``` ## # A tibble: 2 x 6 ## country continent year lifeExp pop gdpPercap ## <fct> <fct> <int> <dbl> <int> <dbl> ## 1 Canada Americas 1952 68.8 14785584 11367. ## 2 Canada Americas 1957 70.0 17010154 12490. ``` -- Pipes save us typing, make code readable, and allow chaining like above, so we use them *all the time* when manipulating data frames. --- # Using Pipes Pipes are clearest to read when you have each function on a separate line. -- ```r take_this_data %>% do_first_thing(with = this_value) %>% do_next_thing(using = that_value) %>% ... ``` -- Stuff to the left of the pipe is passed to the *first argument* of the function on the right. Other arguments go on the right in the function. -- If you ever find yourself piping a function where data are not the first argument, use `.` in the data argument instead. ```r gapminder %>% lm(pop ~ year, data = .) ``` --- # Pipe Assignment When creating a new object from the output of piped functions, you place the assignment operator *at the beginning*. ```r lm_pop_year <- gapminder %>% lm(pop ~ year, data = .) ``` No matter how long the chain of functions is, assignment is always done *at the top*. --- # `filter` Data Frames I used **`filter()`** earlier. We subset *rows* of data using logical conditions with `filter()`! ```r gapminder %>% filter(country == "Oman") %>% head(8) ``` ``` ## # A tibble: 8 x 6 ## country continent year lifeExp pop gdpPercap ## <fct> <fct> <int> <dbl> <int> <dbl> ## 1 Oman Asia 1952 37.6 507833 1828. ## 2 Oman Asia 1957 40.1 561977 2243. ## 3 Oman Asia 1962 43.2 628164 2925. ## 4 Oman Asia 1967 47.0 714775 4721. ## 5 Oman Asia 1972 52.1 829050 10618. ## 6 Oman Asia 1977 57.4 1004533 11848. ## 7 Oman Asia 1982 62.7 1301048 12955. ## 8 Oman Asia 1987 67.7 1593882 18115. ``` What is this doing? --- # Logical Operators Logical operators test boolean conditions and return `TRUE`, `FALSE`, or `NA`. -- `filter()` returns rows when the condition is `TRUE`. We used `==` for testing "equals": `country == "Oman"`. -- There are many other [logical operators](http://www.statmethods.net/management/operators.html): -- * `!=`: not equal to -- * `>`, `>=`, `<`, `<=`: less than, less than or equal to, etc. -- * `%in%`: used with checking equal to one of several values -- Or we can combine multiple logical conditions: * `&`: both conditions need to hold (AND) -- * `|`: at least one condition needs to hold (OR) -- * `!`: inverts a logical condition (`TRUE` becomes `FALSE`, `FALSE` becomes `TRUE`) --- # Multiple Conditions Example Let's say we want observations from Oman after 1980 and through 2000. -- ```r gapminder %>% filter(country == "Oman" & year > 1980 & year <= 2000 ) ``` ``` ## # A tibble: 4 x 6 ## country continent year lifeExp pop gdpPercap ## <fct> <fct> <int> <dbl> <int> <dbl> ## 1 Oman Asia 1982 62.7 1301048 12955. ## 2 Oman Asia 1987 67.7 1593882 18115. ## 3 Oman Asia 1992 71.2 1915208 18617. ## 4 Oman Asia 1997 72.5 2283635 19702. ``` --- # `%in%` Operator Common use case: Filter rows to things in some *set*. We can use `%in%` like `==` but for matching *any element* in the vector on its right<sup>1</sup>. ```r *former_yugoslavia <- c("Bosnia and Herzegovina", "Croatia", * "Macedonia", "Montenegro", "Serbia", "Slovenia") yugoslavia <- gapminder %>% filter(country %in% former_yugoslavia) tail(yugoslavia, 2) ``` ``` ## # A tibble: 2 x 6 ## country continent year lifeExp pop gdpPercap ## <fct> <fct> <int> <dbl> <int> <dbl> ## 1 Slovenia Europe 2002 76.7 2011497 20660. ## 2 Slovenia Europe 2007 77.9 2009245 25768. ``` .footnote[[1] The `c()` function is how we make **vectors** in R, which are an important data type.] --- ## Sorting: `arrange()` Along with filtering the data to see certain rows, we might want to sort it: ```r yugoslavia %>% arrange(year, desc(pop)) ``` ``` ## # A tibble: 60 x 6 ## country continent year lifeExp pop gdpPercap ## <fct> <fct> <int> <dbl> <int> <dbl> ## 1 Serbia Europe 1952 58.0 6860147 3581. ## 2 Croatia Europe 1952 61.2 3882229 3119. ## 3 Bosnia and Herzegovina Europe 1952 53.8 2791000 974. ## 4 Slovenia Europe 1952 65.6 1489518 4215. ## 5 Montenegro Europe 1952 59.2 413834 2648. ## 6 Serbia Europe 1957 61.7 7271135 4981. ## 7 Croatia Europe 1957 64.8 3991242 4338. ## 8 Bosnia and Herzegovina Europe 1957 58.4 3076000 1354. ## 9 Slovenia Europe 1957 67.8 1533070 5862. ## 10 Montenegro Europe 1957 61.4 442829 3682. ## # ... with 50 more rows ``` The data are sorted by ascending `year` and descending `pop`. --- ## Keeping Columns: `select()` Not only can we subset rows, but we can include specific columns (and put them in the order listed) using **`select()`**. ```r yugoslavia %>% select(country, year, pop) %>% head(4) ``` ``` ## # A tibble: 4 x 3 ## country year pop ## <fct> <int> <int> ## 1 Bosnia and Herzegovina 1952 2791000 ## 2 Bosnia and Herzegovina 1957 3076000 ## 3 Bosnia and Herzegovina 1962 3349000 ## 4 Bosnia and Herzegovina 1967 3585000 ``` --- ## Dropping Columns: `select()` We can instead drop only specific columns with `select()` using `-` signs: ```r yugoslavia %>% select(-continent, -pop, -lifeExp) %>% head(4) ``` ``` ## # A tibble: 4 x 3 ## country year gdpPercap ## <fct> <int> <dbl> ## 1 Bosnia and Herzegovina 1952 974. ## 2 Bosnia and Herzegovina 1957 1354. ## 3 Bosnia and Herzegovina 1962 1710. ## 4 Bosnia and Herzegovina 1967 2172. ``` --- ## Helper Functions for `select()` `select()` has a variety of helper functions like `starts_with()`, `ends_with()`, and `contains()`, or can be given a range of continguous columns `startvar:endvar`. See `?select` for details. These are very useful if you have a "wide" data frame with column names following a pattern or ordering.  ```r DYS %>% select(starts_with("married")) DYS %>% select(ends_with("18")) ``` --- ## Renaming Columns with `select()` We can rename columns using `select()`, but that drops everything that isn't mentioned: ```r yugoslavia %>% select(Life_Expectancy = lifeExp) %>% head(4) ``` ``` ## # A tibble: 4 x 1 ## Life_Expectancy ## <dbl> ## 1 53.8 ## 2 58.4 ## 3 61.9 ## 4 64.8 ``` --- ### Safer: Rename Columns with `rename()` **`rename()`** renames variables using the same syntax as `select()` without dropping unmentioned variables. ```r yugoslavia %>% select(country, year, lifeExp) %>% rename(Life_Expectancy = lifeExp) %>% head(4) ``` ``` ## # A tibble: 4 x 3 ## country year Life_Expectancy ## <fct> <int> <dbl> ## 1 Bosnia and Herzegovina 1952 53.8 ## 2 Bosnia and Herzegovina 1957 58.4 ## 3 Bosnia and Herzegovina 1962 61.9 ## 4 Bosnia and Herzegovina 1967 64.8 ``` --- class: inverse # Creating Variables --- ## `mutate()` In `dplyr`, you can add new columns to a data frame using **`mutate()`**. ```r yugoslavia %>% filter(country == "Serbia") %>% select(year, pop, lifeExp) %>% * mutate(pop_million = pop / 1000000, * life_exp_past_40 = lifeExp - 40) %>% head(5) ``` ``` ## # A tibble: 5 x 5 ## year pop lifeExp pop_million life_exp_past_40 ## <int> <int> <dbl> <dbl> <dbl> ## 1 1952 6860147 58.0 6.86 18.0 ## 2 1957 7271135 61.7 7.27 21.7 ## 3 1962 7616060 64.5 7.62 24.5 ## 4 1967 7971222 66.9 7.97 26.9 ## 5 1972 8313288 68.7 8.31 28.7 ``` .footnote[Note you can create multiple variables in a single `mutate()` call by separating the expressions with commas.] --- # `ifelse()` A common function used in `mutate()` (and in general in R programming) is **`ifelse()`**. It returns a vector of values depending on a logical test. ```r ifelse(test = x==y, yes = first_value , no = second_value) ``` Output from `ifelse()` if `x==y` is... * `TRUE`: `first_value` - the value for `yes =` * `FALSE`: `second_value` - the value for `no = ` * `NA`: `NA` - because you can't test for NA with an equality! -- For example: ```r example <- c(1, 0, NA, -2) ifelse(example > 0, "Positive", "Not Positive") ``` ``` ## [1] "Positive" "Not Positive" NA "Not Positive" ``` --- # `ifelse()` Example ```r yugoslavia %>% mutate(short_country = ifelse(country == "Bosnia and Herzegovina", "B and H", as.character(country))) %>% select(short_country, year, pop) %>% arrange(year, short_country) %>% head(3) ``` ``` ## # A tibble: 3 x 3 ## short_country year pop ## <chr> <int> <int> ## 1 B and H 1952 2791000 ## 2 Croatia 1952 3882229 ## 3 Montenegro 1952 413834 ``` Read this as "For each row, if country equals 'Bosnia and Herzegovina', make `short_country` equal to 'B and H', otherwise make it equal to that row's value of `country`." This is a simple way to change some values but not others! --- # `case_when()` **`case_when()`** performs multiple `ifelse()` operations at the same time. `case_when()` allows you to create a new variable with values based on multiple logical statements. This is useful for making categorical variables or variables from combinations of other variables. .smallish[ ```r gapminder %>% mutate(gdpPercap_ordinal = case_when( gdpPercap < 700 ~ "low", gdpPercap >= 700 & gdpPercap < 800 ~ "moderate", TRUE ~ "high" )) %>% # Value when all other statements are FALSE slice(6:9) # get rows 6 through 9 ``` ``` ## # A tibble: 4 x 7 ## country continent year lifeExp pop gdpPercap gdpPercap_ordinal ## <fct> <fct> <int> <dbl> <int> <dbl> <chr> ## 1 Afghanistan Asia 1977 38.4 14880372 786. moderate ## 2 Afghanistan Asia 1982 39.9 12881816 978. high ## 3 Afghanistan Asia 1987 40.8 13867957 852. high ## 4 Afghanistan Asia 1992 41.7 16317921 649. low ``` ] --- class: inverse # Summarizing Data --- ## General Aggregation: `summarize()` **`summarize()`** takes your column(s) of data and computes something using every row: * Count how many rows there are * Calculate the mean * Compute the sum * Obtain a minimum or maximum value You can use any function in `summarize()` that aggregates *multiple values* into a *single value* (like `sd()`, `mean()`, or `max()`). --- # `summarize()` Example For the year 1982, let's get the *number of observations*, *total population*, *mean life expectancy*, and *range of life expectancy* for former Yugoslavian countries. ```r yugoslavia %>% filter(year == 1982) %>% summarize(n_obs = n(), total_pop = sum(pop), mean_life_exp = mean(lifeExp), range_life_exp = max(lifeExp) - min(lifeExp)) ``` ``` ## # A tibble: 1 x 4 ## n_obs total_pop mean_life_exp range_life_exp ## <int> <int> <dbl> <dbl> ## 1 5 20042685 71.3 3.94 ``` These new variables are calculated using *all of the rows* in `yugoslavia` --- # Avoiding Repetition: ### `summarize_at()` Maybe you need to calculate the mean and standard deviation of a bunch of columns. With **`summarize_at()`**, put the variables to compute over first `vars()` (using `select()` syntax) and put the functions to use in `funs()` after. ```r yugoslavia %>% filter(year == 1982) %>% summarize_at(vars(lifeExp, pop), list(~mean(.), ~sd(.))) ``` ``` ## # A tibble: 1 x 4 ## lifeExp_mean pop_mean lifeExp_sd pop_sd ## <dbl> <dbl> <dbl> <dbl> ## 1 71.3 4008537 1.60 3237282. ``` Note it automatically names the summarized variables based on the functions used to summarize. --- # Avoiding Repetition ### Other functions: There are additional `dplyr` functions similar to `summarize_at()`: * `summarize_all()` and `mutate_all()` summarize / mutate *all* variables sent to them in the same way. For instance, getting the mean and standard deviation of an entire dataframe: ```r dataframe %>% summarize_all(funs(mean, sd)) ``` * `summarize_if()` and `mutate_if()` summarize / mutate all variables that satisfy some logical condition. For instance, summarizing every numeric column in a dataframe at once: ```r dataframe %>% summarize_if(is.numeric, funs(mean, sd)) ``` You can use all of these to avoid typing out the same code repeatedly! --- # `group_by()` The special function **`group_by()`** changes how subsequent functions operate on the data, most importantly `summarize()`. Functions after `group_by()` are computed *within each group* as defined by unique valus of the variables given, rather than over all rows at once. Typically the variables you group by will be integers, factors, or characters, and *not continuous real values*. --- `group_by()` example ```r yugoslavia %>% * group_by(year) %>% summarize(num_countries = n_distinct(country), total_pop = sum(pop), total_gdp_per_cap = sum(pop*gdpPercap)/total_pop) %>% head(5) ``` ``` ## # A tibble: 5 x 4 ## year num_countries total_pop total_gdp_per_cap ## <int> <int> <int> <dbl> ## 1 1952 5 15436728 3030. ## 2 1957 5 16314276 4187. ## 3 1962 5 17099107 5257. ## 4 1967 5 17878535 6656. ## 5 1972 5 18579786 8730. ``` Because we did `group_by()` with `year` then used `summarize()`, we get *one row per value of `year`*! Each value of year is its own **group**! --- ## Window Functions Grouping can also be used with `mutate()` or `filter()` to give rank orders within a group, lagged values, and cumulative sums. You can read more about window functions in this [vignette](https://cran.r-project.org/web/packages/dplyr/vignettes/window-functions.html). ```r yugoslavia %>% select(country, year, pop) %>% filter(year >= 2002) %>% group_by(country) %>% mutate(lag_pop = lag(pop, order_by = year), pop_chg = pop - lag_pop) %>% head(4) ``` ``` ## # A tibble: 4 x 5 ## # Groups: country [2] ## country year pop lag_pop pop_chg ## <fct> <int> <int> <int> <int> ## 1 Bosnia and Herzegovina 2002 4165416 NA NA ## 2 Bosnia and Herzegovina 2007 4552198 4165416 386782 ## 3 Croatia 2002 4481020 NA NA ## 4 Croatia 2007 4493312 4481020 12292 ``` --- class: inverse # Tidying Data --- # Initial Spot Checks .smallish[ First things to check after loading new data: ] -- .smallish[ * Did the last rows/columns from the original file make it in? + May need to use different package or manually specify range ] -- .smallish[ * Are the column names in good shape? + Modify a `col_names=` argument or fix with `rename()` ] -- .smallish[ * Are there "decorative" blank rows or columns to remove? + `filter()` or `select()` out those rows/columns ] -- .smallish[ * How are missing values represented: `NA`, `" "` (blank), `.` (period), `999`? + Use `mutate()` with `ifelse()` to fix these (perhaps *en masse* with looping) ] -- .smallish[ * Are there character data (e.g. ZIP codes with leading zeroes) being incorrectly represented as numeric or vice versa? + Modify `col_types=` argument, or use `mutate()` and `as.numeric()` ] --- # Slightly Messy Data | **Program** | **Female** | **Male** | |-----------------|-----------:|---------:| | Evans School | 10 | 6 | | Arts & Sciences | 5 | 6 | | Public Health | 2 | 3 | | Other | 5 | 1 | -- * What is an observation? + A group of students from a program of a given gender * What are the variables? + Program, Gender, Count * What are the values? + Program: Evans School, Arts & Sciences, Public Health, Other + Gender: Female, Male -- **in the column headings, not its own column!** + Count: **spread over two columns!** --- # Tidy Version | **Program** | **Gender** | **Count** | |-----------------|-----------:|---------:| | Evans School | Female | 10 | | Evans School | Male | 6 | | Arts & Sciences | Female | 5 | | Arts & Sciences | Male | 6 | | Public Health | Female | 2 | | Public Health | Male | 3 | | Other | Female | 5 | | Other | Male | 1 | Each variable is a column. Each observation is a row. Ready to throw into `ggplot()` or a model! --- # Billboard Data We're going to work with some *ugly* data: *The Billboard Hot 100 for the year 2000*. We can load it like so: ```r library(readr) # Contains read_csv() billboard_2000_raw <- read_csv(file = "https://github.com/clanfear/Intermediate_R_Workshop/raw/master/data/billboard.csv", * col_types = paste(c("icccD", rep("i", 76)), collapse="")) ``` .footnote[`col_types=` is used to specify column types. [See here for details.](https://clanfear.github.io/CSSS508/Lectures/Week5/CSSS508_week5_data_import_export_cleaning.html#29)] --- # Billboard is Just Ugly-Messy .small[ | year | artist | track | time | date.entered | wk1 | wk2 | wk3 | wk4 | wk5 | |:----:|:-------------------:|:-----------------------:|:----:|:------------:|:---:|:---:|:---:|:---:|:---:| | 2000 | 2 Pac | Baby Don't Cry (Keep... | 4:22 | 2000-02-26 | 87 | 82 | 72 | 77 | 87 | | 2000 | 2Ge+her | The Hardest Part Of ... | 3:15 | 2000-09-02 | 91 | 87 | 92 | NA | NA | | 2000 | 3 Doors Down | Kryptonite | 3:53 | 2000-04-08 | 81 | 70 | 68 | 67 | 66 | | 2000 | 3 Doors Down | Loser | 4:24 | 2000-10-21 | 76 | 76 | 72 | 69 | 67 | | 2000 | 504 Boyz | Wobble Wobble | 3:35 | 2000-04-15 | 57 | 34 | 25 | 17 | 17 | | 2000 | 98^0 | Give Me Just One Nig... | 3:24 | 2000-08-19 | 51 | 39 | 34 | 26 | 26 | | 2000 | A*Teens | Dancing Queen | 3:44 | 2000-07-08 | 97 | 97 | 96 | 95 | 100 | | 2000 | Aaliyah | I Don't Wanna | 4:15 | 2000-01-29 | 84 | 62 | 51 | 41 | 38 | | 2000 | Aaliyah | Try Again | 4:03 | 2000-03-18 | 59 | 53 | 38 | 28 | 21 | | 2000 | Adams, Yolanda | Open My Heart | 5:30 | 2000-08-26 | 76 | 76 | 74 | 69 | 68 | | 2000 | Adkins, Trace | More | 3:05 | 2000-04-29 | 84 | 84 | 75 | 73 | 73 | | 2000 | Aguilera, Christina | Come On Over Baby (A... | 3:38 | 2000-08-05 | 57 | 47 | 45 | 29 | 23 | ] Week columns continue up to `wk76`! --- # Billboard * What are the **observations** in the data? -- + Week since entering the Billboard Hot 100 per song -- * What are the **variables** in the data? -- + Year, artist, track, song length, date entered Hot 100, week since first entered Hot 100 (**spread over many columns**), rank during week (**spread over many columns**) -- * What are the **values** in the data? -- + e.g. 2000; 3 Doors Down; Kryptonite; 3 minutes 53 seconds; April 8, 2000; Week 3 (**stuck in column headings**); rank 68 (**spread over many columns**) --- # Tidy Data **Tidy data** (aka "long data") are such that: -- 1. The values for a single observation are in their own row. -- 2. The values for a single variable are in their own column. -- 3. The observations are all of the same nature. -- Why do we want tidy data? * Easier to understand many rows than many columns * Required for plotting in `ggplot2` * Required for many types of statistical procedures (e.g. hierarchical or mixed effects models) * Fewer confusing variable names * Fewer issues with missing values and "imbalanced" repeated measures data --- # `tidyr` The `tidyr` package provides functions to tidy up data, similar to `reshape` in Stata or `varstocases` in SPSS. Key functions: -- * **`gather()`**: takes a set of columns and rotates them down to make two new columns (which you can name yourself): * A `key` that stores the original column names * A `value` with the values in those original columns -- * **`spread()`**: inverts `gather()` by taking two columns and rotating them up into multiple columns -- * **`separate()`**: pulls apart one column into multiple columns (common with `gather`ed data where values had been embedded in column names) * `extract_numeric()` does a simple version of this for the common case when you just want grab the number part -- * **`extract()`** for spreading a column into multiple *sets* of columns. * See [Hadley's response to this question](https://stackoverflow.com/questions/25925556/gather-multiple-sets-of-columns) for an example. --- # `gather()` Let's use `gather()` to get the week and rank variables out of their current layout into two columns (big increase in rows, big drop in columns): ```r library(tidyr) billboard_2000 <- billboard_2000_raw %>% * gather(key = week, value = rank, starts_with("wk")) dim(billboard_2000) ``` ``` ## [1] 24092 7 ``` `starts_with()` and other helper functions from `dplyr::select()` work here too. We could instead use: `gather(key = week, value = rank, wk1:wk76)` to pull out these contiguous columns. --- # `gather`ed Weeks .smallish[ ```r head(billboard_2000) ``` ``` ## # A tibble: 6 x 7 ## year artist track time date.entered week rank ## <int> <chr> <chr> <chr> <date> <chr> <int> ## 1 2000 2 Pac Baby Don't Cry (Keep... 4:22 2000-02-26 wk1 87 ## 2 2000 2Ge+her The Hardest Part Of ... 3:15 2000-09-02 wk1 91 ## 3 2000 3 Doors Down Kryptonite 3:53 2000-04-08 wk1 81 ## 4 2000 3 Doors Down Loser 4:24 2000-10-21 wk1 76 ## 5 2000 504 Boyz Wobble Wobble 3:35 2000-04-15 wk1 57 ## 6 2000 98^0 Give Me Just One Nig... 3:24 2000-08-19 wk1 51 ``` ] Now we have a single week column! --- # Gathering Better? ```r summary(billboard_2000$rank) ``` ``` ## Min. 1st Qu. Median Mean 3rd Qu. Max. NA's ## 1.00 26.00 51.00 51.05 76.00 100.00 18785 ``` This is an improvement, but we don't want to keep the 18785 rows with missing ranks (i.e. observations for weeks since entering the Hot 100 that the song was no longer on the Hot 100). --- # Gathering Better: `na.rm` The argument `na.rm = TRUE` to `gather()` will remove rows with missing ranks. ```r billboard_2000 <- billboard_2000_raw %>% gather(key = week, value = rank, starts_with("wk"), * na.rm = TRUE) summary(billboard_2000$rank) ``` ``` ## Min. 1st Qu. Median Mean 3rd Qu. Max. ## 1.00 26.00 51.00 51.05 76.00 100.00 ``` --- # `separate()` The track length column isn't analytically friendly. Let's convert it to a number rather than the character (minutes:seconds) format: ```r billboard_2000 <- billboard_2000 %>% separate(time, into = c("minutes", "seconds"), * sep = ":", convert = TRUE) %>% mutate(length = minutes + seconds / 60) %>% select(-minutes, -seconds) summary(billboard_2000$length) ``` ``` ## Min. 1st Qu. Median Mean 3rd Qu. Max. ## 2.600 3.667 3.933 4.031 4.283 7.833 ``` `sep = :` tells `separate()` to split the column into two where it finds a colon (`:`). Then we add `seconds / 60` to `minutes` to produce a numeric `length` in minutes. --- # `parse_number()` `tidyr` provides a convenience function to grab just the numeric information from a column that mixes text and numbers: ```r billboard_2000 <- billboard_2000 %>% * mutate(week = parse_number(week)) summary(billboard_2000$week) ``` ``` ## Min. 1st Qu. Median Mean 3rd Qu. Max. ## 1.00 5.00 10.00 11.47 16.00 65.00 ``` For more sophisticated conversion or pattern checking, you'll need to use string parsing (to be covered in week 8). --- # `spread()` Motivation `spread()` is the opposite of `gather()`, which you use if you have data for the same observation taking up multiple rows. -- Example of data that we probably want to spread (unless we want to plot each statistic in its own facet): .small[ | **Group** | **Statistic** | **Value** | |-------|-----------|------:| | A | Mean | 1.28 | | A | Median | 1.0 | | A | SD | 0.72 | | B | Mean | 2.81 | | B | Median | 2 | | B | SD | 1.33 | ] A common cue to use `spread()` is having measurements of different quantities in the same column. --- # Before `spread()` .smallish[ ```r (too_long_data <- data.frame(Group = c(rep("A", 3), rep("B", 3)), Statistic = rep(c("Mean", "Median", "SD"), 2), Value = c(1.28, 1.0, 0.72, 2.81, 2, 1.33))) ``` ``` ## Group Statistic Value ## 1 A Mean 1.28 ## 2 A Median 1.00 ## 3 A SD 0.72 ## 4 B Mean 2.81 ## 5 B Median 2.00 ## 6 B SD 1.33 ``` ] --- # After `spread()` ```r (just_right_data <- too_long_data %>% spread(key = Statistic, value = Value)) ``` ``` ## Group Mean Median SD ## 1 A 1.28 1 0.72 ## 2 B 2.81 2 1.33 ``` --- # Charts of 2000: Data Prep Let's look at songs that hit #1 at some point and look how they got there versus songs that did not: ```r # find best rank for each song best_rank <- billboard_2000 %>% group_by(artist, track) %>% * summarize(min_rank = min(rank), weeks_at_1 = sum(rank == 1)) %>% mutate(`Peak rank` = ifelse(min_rank == 1, "Hit #1", "Didn't #1")) # merge onto original data billboard_2000 <- billboard_2000 %>% left_join(best_rank, by = c("artist", "track")) ``` .footnote[Note that because the "highest" rank is *numerically lowest* (1), we are summarizing with `min()`.] --- ## Which Were #1 the Most Weeks? ```r billboard_2000 %>% select(artist, track, weeks_at_1) %>% distinct(artist, track, weeks_at_1) %>% arrange(desc(weeks_at_1)) %>% head(7) ``` ``` ## # A tibble: 7 x 3 ## artist track weeks_at_1 ## <chr> <chr> <int> ## 1 Destiny's Child Independent Women Pa... 11 ## 2 Santana Maria, Maria 10 ## 3 Aguilera, Christina Come On Over Baby (A... 4 ## 4 Madonna Music 4 ## 5 Savage Garden I Knew I Loved You 4 ## 6 Destiny's Child Say My Name 3 ## 7 Iglesias, Enrique Be With You 3 ``` --- # Getting Usable Dates We have the date the songs first charted, but not the dates for later weeks. We can calculate these now that the data are tidy: ```r billboard_2000 <- billboard_2000 %>% * mutate(date = date.entered + (week - 1) * 7) billboard_2000 %>% arrange(artist, track, week) %>% select(artist, date.entered, week, date, rank) %>% head(4) ``` ``` ## # A tibble: 4 x 5 ## artist date.entered week date rank ## <chr> <date> <dbl> <date> <int> ## 1 2 Pac 2000-02-26 1 2000-02-26 87 ## 2 2 Pac 2000-02-26 2 2000-03-04 82 ## 3 2 Pac 2000-02-26 3 2000-03-11 72 ## 4 2 Pac 2000-02-26 4 2000-03-18 77 ``` This works because `date` objects are in units of days—we just add 7 days per week to the start date. --- class: inverse ##Joining Data --- ## When Do We Need to Join Data? * Want to make columns using criteria too complicated for `ifelse()` or `case_when()` * We can work with small sets of variables then combine them back together. * Combine data stored in separate data sets: e.g. UW registrar information with police stop records. * Often large surveys are broken into different data sets for each level (e.g. household, individual, neighborhood) --- ## Joining in Concept We need to think about the following when we want to merge data frames `A` and `B`: * Which *rows* are we keeping from each data frame? * Which *columns* are we keeping from each data frame? * Which variables determine whether rows *match*? --- ## Join Types: Rows and columns kept There are many types of joins<sup>1</sup>... * `A %>% left_join(B)`: keep all rows from `A`, matched with `B` wherever possible (`NA` when not), keep columns from both `A` and `B` * `A %>% right_join(B)`: keep all rows from `B`, matched with `A` wherever possible (`NA` when not), keep columns from both `A` and `B` * `A %>% inner_join(B)`: keep only rows from `A` and `B` that match, keep columns from both `A` and `B` * `A %>% full_join(B)`: keep all rows from both `A` and `B`, matched wherever possible (`NA` when not), keep columns from both `A` and `B` * `A %>% semi_join(B)`: keep rows from `A` that match rows in `B`, keep columns from only `A` * `A %>% anti_join(B)`: keep rows from `A` that *don't* match a row in `B`, keep columns from only `A` .pull-right[.footnote[[1] Usually `left_join()` does the job.]] --- ## Matching Criteria We say rows should *match* because they have some columns containing the same value. We list these in a `by = ` argument to the join. Matching Behavior: * No `by`: Match using all variables in `A` and `B` that have identical names -- * `by = c("var1", "var2", "var3")`: Match on identical values of `var1`, `var2`, and `var3` in both `A` and `B` -- * `by = c("Avar1" = "Bvar1", "Avar2" = "Bvar2")`: Match identical values of `Avar1` variable in `A` to `Bvar1` variable in `B`, and `Avar2` variable in `A` to `Bvar2` variable in `B` Note: If there are multiple matches, you'll get *one row for each possible combination* (except with `semi_join()` and `anti_join()`). Need to get more complicated? Break it into multiple operations. --- ## `nycflights13` Data We'll use data in the [`nycflights13` package](https://cran.r-project.org/web/packages/nycflights13/nycflights13.pdf). ```r library(nycflights13) ``` It includes five dataframes, some of which contain missing data (`NA`): * `flights`: flights leaving JFK, LGA, or EWR in 2013 * `airlines`: airline abbreviations * `airports`: airport metadata * `planes`: airplane metadata * `weather`: hourly weather data for JFK, LGA, and EWR Note these are *separate data frames*, each needing to be *loaded separately*: ```r data(flights) data(airlines) data(airports) # and so on... ``` --- ## Join Example Who manufactures the planes that flew to SeaTac? ```r flights %>% filter(dest == "SEA") %>% select(tailnum) %>% * left_join(planes %>% select(tailnum, manufacturer), by = "tailnum") %>% count(manufacturer) %>% # Count observations by manufacturer arrange(desc(n)) # Arrange data descending by count ``` ``` ## # A tibble: 6 x 2 ## manufacturer n ## <chr> <int> ## 1 BOEING 2659 ## 2 AIRBUS 475 ## 3 AIRBUS INDUSTRIE 394 ## 4 <NA> 391 ## 5 BARKER JACK L 2 ## 6 CIRRUS DESIGN CORP 2 ``` Note you can perform operations on the data inside functions such as `left_join()` and the *output* will be used by the function. --- # Visualization Preview The next workshop will focus on visualization using `ggplot2`. We could visualize the data we worked with today to understand it better. --- # Charts of 2000: `ggplot2` ```r library(ggplot2) billboard_trajectories <- ggplot(data = billboard_2000, aes(x = week, y = rank, group = track, color = `Peak rank`) ) + geom_line(aes(size = `Peak rank`), alpha = 0.4) + # rescale time: early weeks more important scale_x_log10(breaks = seq(0, 70, 10)) + scale_y_reverse() + # want rank 1 on top, not bottom theme_classic() + xlab("Week") + ylab("Rank") + scale_color_manual("Peak Rank", values = c("black", "red")) + scale_size_manual("Peak Rank", values = c(0.25, 1)) + theme(legend.position = c(0.90, 0.25), legend.background = element_rect(fill="transparent")) ``` --- # Charts of 2000: Beauty! <!-- --> Observation: There appears to be censoring around week 20 for songs falling out of the top 50 that I'd want to follow up on. --- # Resources * [UW CSSS508](https://clanfear.github.io/CSSS508/): My University of Washington Introduction to R course which forms the basis for this workshop. All content including lecture videos is freely available. * [R for Data Science](http://r4ds.had.co.nz/) online textbook by Garrett Grolemund and Hadley Wickham. One of many good R texts available, but importantly it is free and focuses on the [`tidyverse`](http://tidyverse.org/) collection of R packages which are the modern standard for data manipulation and visualization in R. * [Advanced R](http://adv-r.had.co.nz/) online textbook by Hadley Wickham. A great source for more in-depth and advanced R programming. * [DataCamp](https://www.datacamp.com/): A source for interactive R tutorials (some free of charge). * [`swirl`](http://swirlstats.com/students.html): Interactive tutorials inside R. * [Useful RStudio cheatsheets](https://www.rstudio.com/resources/cheatsheets/) on R Markdown, RStudio shortcuts, etc.